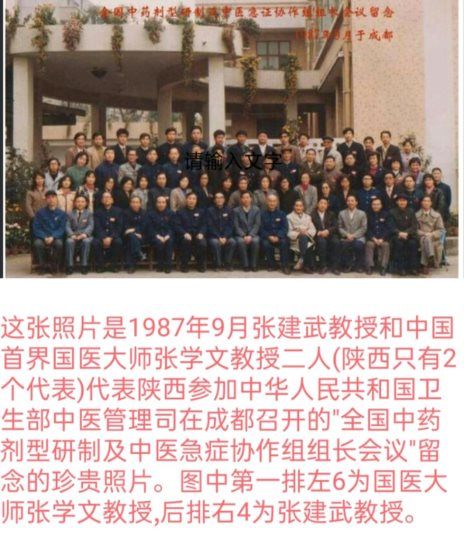

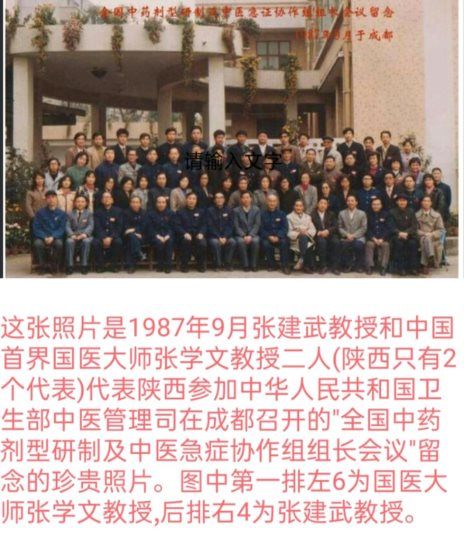

He does not hold the title of Master of Traditional Chinese Medicine, but his experience is comparable or even deeper. In September 1987, while working at the Affiliated Hospital of Shaanxi College of Traditional Chinese Medicine, Professor Zhang Jianwu was one of only two representatives of Shaanxi Province at the "Meeting of the Head of the First Chinese Medicine Formulation Development and Chinese Medicine Emergency Cooperation Group", convened in Chengdu, Sichuan, by the Department of Traditional Chinese Medicine of the Ministry of Health of the People's Republic of China after the reform and opening up. The other representative was named in 2009 among the first Masters of Traditional Chinese Medicine in China. Simply because he did not want to be westernized, he left the system early, resigned from public office and returned to the people, becoming a true folk practitioner of Chinese medicine; had he stayed, his prospects would have been limitless. Many of his former classmates who remain in the system are now graduate supervisors, professors, deans, nationally famous doctors, or well-known experts in Chinese medicine at home and abroad. He instead chose early on to become a thoroughly grounded folk practitioner, because he has always held that Chinese medicine is rooted in the people and that, since ancient times, no famous Chinese medicine doctor has come out of a hospital.

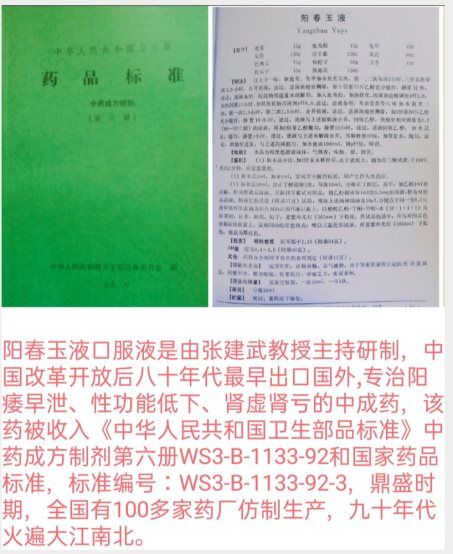

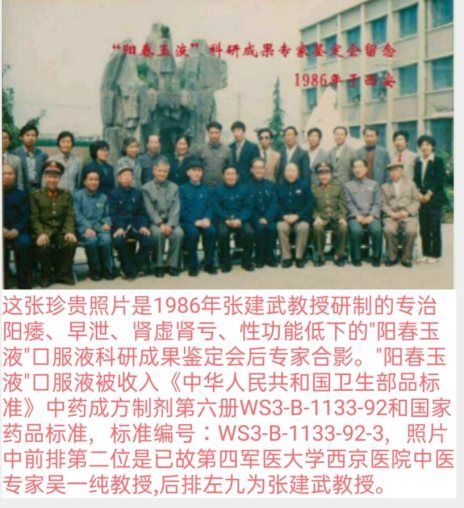

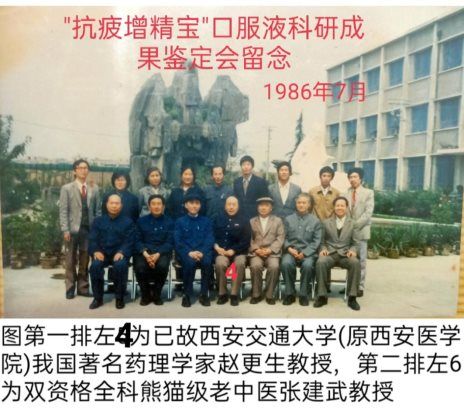

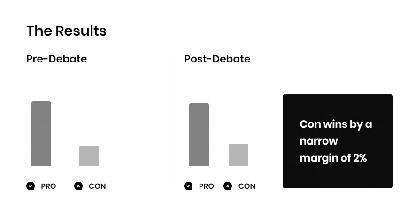

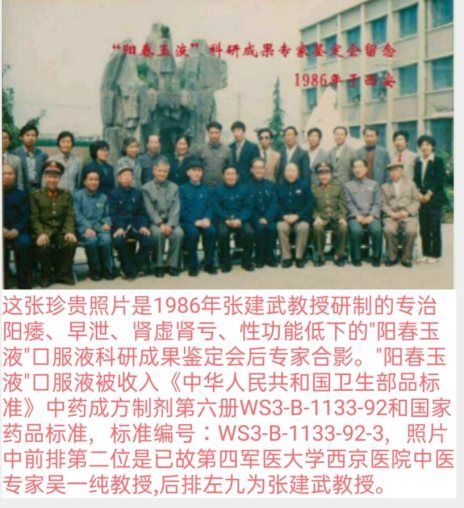

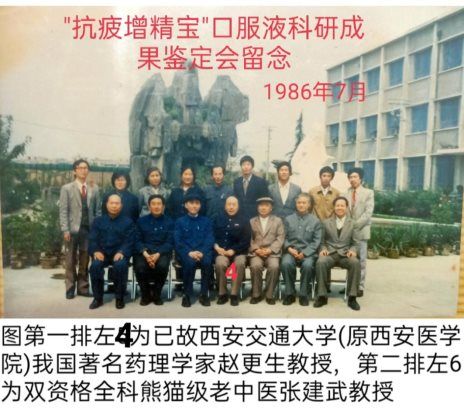

He is not a "king of medicines", but in the 1980s he invented "Yangchun Yuye", a long-established national-level Chinese patent medicine for impotence, premature ejaculation, kidney deficiency and low sexual function, which was exported abroad in 1986. It was the first Chinese patent medicine for kidney disorders exported from China after the reform and opening up, and one of the first eight Chinese patent medicines exported from Shaanxi Province in that period. "Yangchun Yuye" oral liquid was included in Volume 6 of the Traditional Chinese Medicine Prescription Preparations of the Drug Standard of the Ministry of Health of the People's Republic of China (WS3-B-1133-92). At its peak, dozens of pharmaceutical factories in China produced it under the Ministry of Health standard; it showed an obvious curative effect on impotence, premature ejaculation, sexual dysfunction and infertility, with few side effects. The second medicine is an oral preparation called "anti-fatigue and essence-enhancing treasure", used for the "five strains and seven injuries" in men and women, deficiency of qi and blood, deficiency of spleen and kidney, and deficiency of yin and yang. Of the eight exported medicines, six were famous classical prescriptions and only two were modern inventions, both of them Professor Zhang Jianwu's formulas. "Yangchun Yuye" oral liquid is still produced by many pharmaceutical companies in China, and patients call it the "natural Viagra" of Chinese medicine.

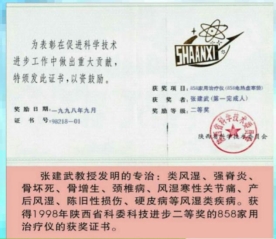

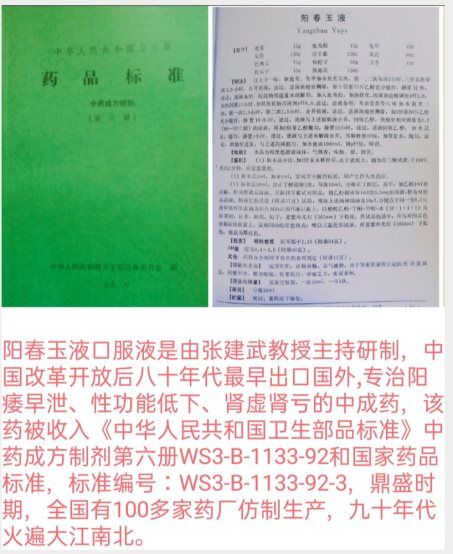

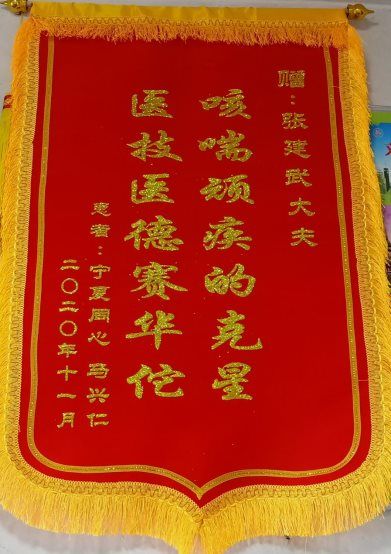

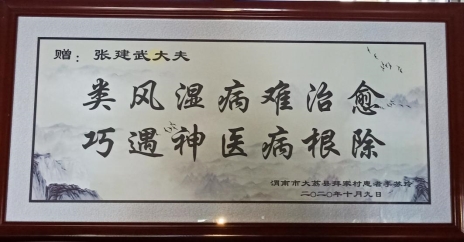

He is a Chinese medicine practitioner, but not an ordinary one. In the early 1990s he developed the "858 home rheumatism therapeutic instrument", used together with "858 Xuhanling" physiotherapy synergistic liquid, to treat rheumatism, rheumatoid diseases, hyperosteogeny, neuritis, sciatica, postpartum rheumatism and other rheumatic diseases. It pioneered a new method of treating rheumatic diseases by delivering liquid Chinese medicine through a medical device, and it remained popular at home and abroad for decades. It was awarded the title of "National Consumer Trusted Product" in 1993 and won the second prize for scientific and technological progress from the Shaanxi Science and Technology Commission in 1996. Later, under the reform of the national pharmaceutical system, medical devices had to be re-examined separately from drugs. Multi-layer management required investments of millions or even tens of millions, with no guarantee that approval would be granted and production could continue. So as not to increase the burden on patients, he reluctantly, and with regret, gave up production. Twenty years later, patients still come to him for rheumatism treatment carrying 858 rheumatism therapeutic instruments that are nearly museum pieces. Many of them lament that such a magical product has disappeared from the market and that nothing similar is available. He can only say with a wry smile that this is the result of the westernization of Chinese medicine and of a national Chinese medicine policy headed in the wrong direction, and that there is nothing an individual can do about it.

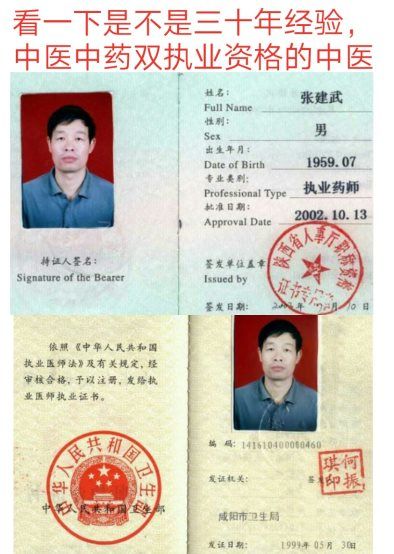

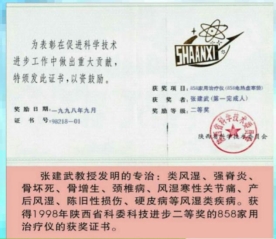

He is a Chinese medicine practitioner, but neither a practitioner of medicine alone nor a westernized, narrowly specialized one. He is a generalist who has been qualified in both Chinese medicine and Chinese materia medica for 30 years, and he is best at treating rheumatic diseases, chronic nephropathy, chronic intractable diseases and complex sub-health conditions. Like the master physicians of ancient times, he is truly proficient in both medicine and materia medica. He came from the people and, after further study and work at the College of Traditional Chinese Medicine, returned to the people.

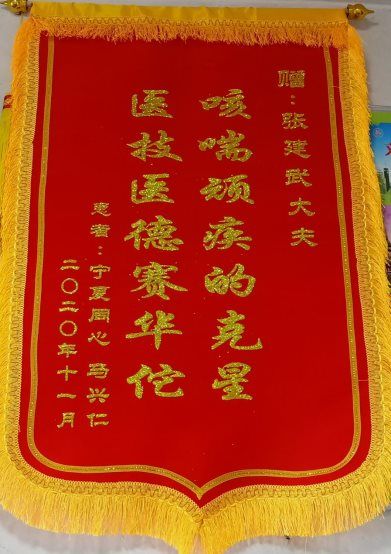

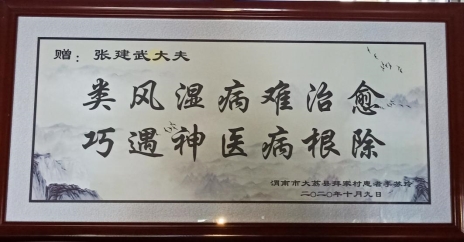

He is known as "Zhang Dana", the contemporary "King of Medicine" and "Hua Tuo's reincarnation". He has sound methods for treating disease but does not cling to a fixed formula. He is best at adapting to circumstances and tailoring treatment to each person, and he is skilled at using traditional Chinese medicine to treat kidney deficiency, impotence, premature ejaculation, chronic nephritis, renal failure and other kidney diseases, as well as rheumatoid diseases and myelitis.

Professor Zhang Jianwu, male, 62 years old, has practiced medicine for 45 years. He was born on July 10, 1959 in Xingping City, Shaanxi Province. At the age of 16 (in 1977), he was accepted as an apprentice by Zhang Dingming, an old Chinese medicine doctor who had graduated from the private Shanghai College of Traditional Chinese Medicine in the late Qing Dynasty and who excelled at treating intractable diseases. In addition, his father had been sick and weak since childhood. Zhang Dingming was famous in Shaanxi before liberation. After liberation he was labeled a rightist and sentenced to 20 years in prison. After his release in 1977, when he was nearly 80 years old, he cured many patients regarded as incurable, and people seeking treatment crowded to his door.

Three things impressed Zhang Jianwu most. First, cars often came to the village bringing people to see Dr. Zhang Dingming. Second, because of his illness, his father had great faith in Chinese medicine and often consulted Dr. Zhang Dingming. Third, Dr. Zhang Dingming's adopted daughter, Zhang Moumou, suffered from leukemia. She was cured while Dr. Zhang Dingming was alive, and she remained well for more than ten years after his death. He had left her a prescription to keep safe and to take if the leukemia ever returned. She later lost the prescription; the leukemia recurred, and she died in the 1990s.

In his youth, Professor Zhang Jianwu was deeply influenced by Zhang Dingming. He came to believe that the old doctor had achieved a thorough understanding of his art, integrating medicine with materia medica and embodying the harmony between man and nature. Dr. Zhang Dingming once felt Zhang Jianwu's pulse and said: "You can get into university, and you are well suited to study Chinese medicine. Come whenever you want, and 'Erbo' will teach you well. I don't want my medical skills to be lost. From the very start, memorize what must be memorized and recite what must be recited. To learn Chinese medicine, study medicine and materia medica together. Learn more about pulse theory, be familiar with medicinal properties, get to know authentic medicinal materials, and learn to prepare the medicines as well. Once you are admitted, make good use of the library of the College of Traditional Chinese Medicine. Read widely and remember the medical essays, case records and prescriptions of famous old practitioners. Do not just memorize a few fixed prescriptions; understand the principles by which the ancient masters composed their formulas, and when you study materia medica, remember the nature and use of each medicine ... and so on." Professor Zhang Jianwu remembered these instructions one by one and has followed them strictly for decades. Although he often did not understand them at the time, he never slackened.

In 1980, Professor Zhang Jianwu became one of the third batch of college students admitted after the college entrance examination system was restored, and he entered Shaanxi College of Traditional Chinese Medicine. When he filled in his application he had just three words in mind: "study Chinese medicine". He applied to only two departments of the college, Chinese medicine and Chinese materia medica, and was admitted to the Department of Traditional Chinese Medicine. His mentor, Dr. Zhang Dingming, told him that university depends mainly on self-study and that textbooks teach only the surface: the master leads you through the door, but the practice is up to you. He also told him to read more medical books, to consult experienced old pharmacists, and to read his way through the entire college library before putting what he had learned into practice. From then on, Professor Zhang Jianwu used every spare moment to study Chinese medicine and materia medica. His classmates nicknamed him "unilateral prescription", because in four years he read nearly 600 books, about three a week, and took notes on them.

In July 1984, Professor Zhang Jianwu graduated from Shaanxi College of Traditional Chinese Medicine with excellent results. After a rigorous trial lecture, examination and screening, he was selected to stay on at the college. He felt, however, that the affiliated hospital was the most down-to-earth place to work, since Chinese medicine is a practical natural science. At the affiliated hospital he spent one year dispensing in the Chinese medicine pharmacy and a period rotating through each department, and was then assigned to the Chinese medicine research room. There he was mainly engaged in clinical pharmacy work: taking part in ward rounds and in consultations on difficult and serious cases, developing new drugs, authenticating medicinal materials, and researching the quality of traditional Chinese medicines. Professor Zhang Jianwu was in his element. He had the chance to learn from many old practitioners at the college while studying materia medica, which laid a solid foundation for becoming a versatile Chinese medicine expert.

The first patient Professor Zhang Jianwu treated was a retired commissioner from Shangluo, who lived with his son at Guomian No.1 Factory. His son worked at Xingping Fertilizer Plant. At that time, Dean Jin Binggan of Xinghua Hospital was on a study placement at the affiliated hospital of Shaanxi College of Traditional Chinese Medicine and shared a dormitory with Professor Zhang Jianwu. One day, Dean Jin told him that a colleague's father was dying of heart failure and had been admitted to the internal medicine department of the affiliated hospital. "I told the patient's son how capable you are," he said, "and recommended you." On hearing this, Professor Zhang Jianwu was both apprehensive and pleased. This was a difficult and serious case that the old professors at the affiliated hospital could not cure, but, being young and eager to prove himself, he agreed at once. On seeing the patient, he found that the man also had various diseases of old age, including Parkinson's disease and hemiplegia. After feeling the pulse and examining the tongue, he returned to the dormitory that night and, instead of resting, searched through his materials. He opened his earlier notes on heart failure and came across a report that the Sino-Japanese Friendship Hospital had obtained good results treating heart failure with large doses of aconite root, which matched the patient's symptoms and signs. Professor Zhang Jianwu first settled on the treatment principle of benefiting qi and warming yang, promoting blood circulation and promoting diuresis, with 60g of aconite (hospitals generally used only 7-10g). He then composed a prescription of medicines that were consistent with TCM syndrome differentiation and that pharmacological research had shown to strengthen the heart. It included 30g of ginseng (10g for most doctors) and 10~30g of astragalus (10-30g for most doctors). After three doses, the patient's edema subsided and he was able to get out of bed and walk. The patient immediately asked to be discharged. Professor Zhang Jianwu suddenly felt that he really was a wonderful doctor, and from then on his courage grew.

The second patient he treated was the foster mother of his uncle's son, who had been given to another family as a child. She was suffering from a liver tumor (at that time, because of limited conditions, only a B-ultrasound examination was performed). Her abdomen was as big as a drum, with severe ascites. She was cured after half a year of treatment by Professor Zhang Jianwu. Ten years later, when he saw his uncle's son, he was told that the foster mother's illness had never recurred up to her death.

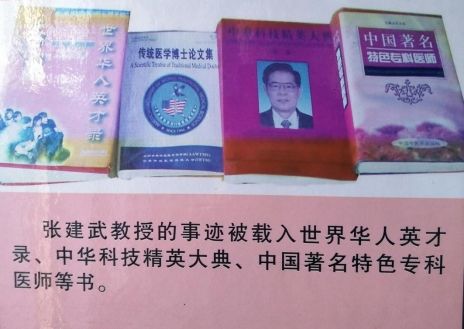

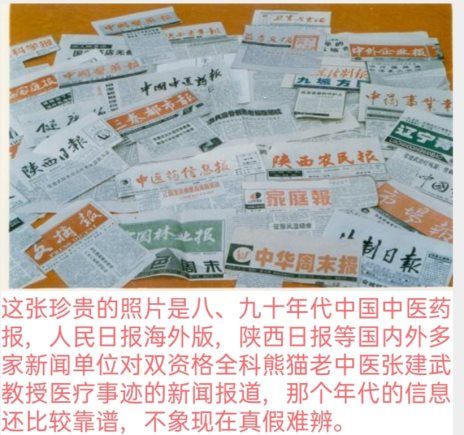

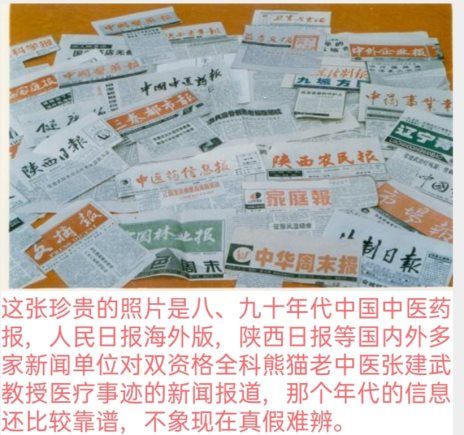

In 1985, Professor Zhang Jianwu developed his first new drug, "Anti-fatigue and Enriching Essence" oral liquid, used to treat men and women with the "five strains and seven injuries" and deficiency of qi and blood. It caused a sensation throughout Shaanxi College of Traditional Chinese Medicine. Even Professor Zhang Xuewen, then president of the college and now the youngest Master of Traditional Chinese Medicine in China, said, "Zhang Jianwu is a talent of our College of Traditional Chinese Medicine." The second new drug he developed was "Yangchun Yuye" oral liquid, used to treat impotence, premature ejaculation and sexual dysfunction. These two drugs were among the first eight Chinese patent medicines exported from Shaanxi Province in 1986, after the reform and opening up. "Yangchun Yuye" was included in the drug standard issued by the Ministry of Health, and more than 30 years later dozens of pharmaceutical factories in the country still produce it. Lv Moumou, a patient from Dianzhang, Xingping, suffered from impotence; his first marriage ended in divorce because of marital disharmony, and his second marriage was also in danger. He was completely cured after three courses of "Yangchun Yuye" oral liquid. The third new drug, developed by Professor Zhang Jianwu in 1986, was "Zhuangyuanchun", used to treat infertility and kidney deficiency, which won the third prize for scientific and technological progress from the Shaanxi Provincial Administration of Medicine. A patient surnamed Zhang, from a transportation company in Xingping City, had been childless for many years after marriage and successfully had a child after two courses of "Zhuangyuanchun" oral liquid.

Because of his great contribution to traditional Chinese medicine, in 1987 Professor Zhang Jianwu, together with Professor Zhang Xuewen, who is now among the first Masters of Traditional Chinese Medicine in China (and the first in the five northwestern provinces), represented Shaanxi at the "National Meeting of Chinese Medicine Formulation Development and Chinese Medicine Emergency Cooperation Group Leaders" held by the Ministry of Health in Chengdu. They were the only two representatives from Shaanxi. In 1988, Professor Zhang Jianwu cooperated with Professor Guo Chengjie, who is now 96 years old and was named in the second group of Masters of Traditional Chinese Medicine (the second in Shaanxi Province), to complete the scientific research project on "Rule Oral Liquid" for treating mammary gland hyperplasia in women. In 1990, Professor Zhang Jianwu's "858 Rheumatology Therapeutic Apparatus Combined with Different Kinds of Physiotherapy Synergistic Liquid" for treating rheumatic diseases won the second prize of the Shaanxi Science and Technology Commission for its remarkable curative effect, and the product was trusted by consumers all over the country. More than 50 news organizations in China reported on Professor Zhang Jianwu's work in detail. His medical achievements were also recorded in "World Talented Chinese", "China Medical Celebrity" and "China Famous Specialist", and Professor Zhang Jianwu himself was also admitted to the United States.

Wang Kangmou, a patient from Huxian County, Xianyang, had suffered from "old cold legs" for more than 30 years, dating from his service in the War to Resist U.S. Aggression and Aid Korea. He was completely cured after five courses of hot-compress physiotherapy using the "858 rheumatism therapeutic instrument" invented by Professor Zhang Jianwu together with the "858 deficiency cold spirit" Chinese medicine infiltration liquid.

At the same time, the longer he stayed in the hospital, the more lost Professor Zhang Jianwu felt. Over-westernized practitioners, practitioners cut off from materia medica, narrowly specialized practitioners and formulaic practitioners made him realize that the Chinese medicine doctors in big hospitals were no longer Chinese medicine doctors in the ancient sense. The big hospitals had completely lost the soil in which real practitioners could survive, and Chinese medicine was dying. So he made a bold decision: he resigned from public office, went down among the people, and became a thoroughly grounded contemporary Sun Simiao, far from fame and fortune. Professor Zhang Jianwu told the reporter: "Real Chinese medicine is among the people, and the root of Chinese medicine is at the grassroots level (many of the old professors and masters in today's famous colleges of traditional Chinese medicine were themselves selected from among folk and grassroots practitioners in their early years). Chinese medicine is a craft, and practice makes perfect. Don't take titles such as professor, expert or recipient of the State Council special allowance too seriously. Social mores today are far worse than in the past, and there are too many practitioners in name only. Don't assume that real Chinese medicine can be found everywhere. Western medicine is well developed in the big cities and practicing it pays well, so in all likelihood most Chinese medicine practitioners there have been westernized too. Practicing that kind of Chinese medicine is worth less than a few years as a grassroots practitioner. Li Ke, the late old Chinese medicine doctor of Shanxi, can be counted as a real one."

Professor Zhang Jianwu is good at treating rheumatic diseases, including rheumatoid diseases, postpartum rheumatism, hyperosteogeny, sciatica, ankylosing spondylitis, scapulohumeral periarthritis, cervical spondylosis, disc herniation, gout, rheumatic cold joint pain and osteonecrosis; infertility, kidney deficiency, impotence and premature ejaculation; hypertensive nephropathy, diabetic nephropathy, chronic nephritis and other nephropathies; and sub-health conditioning, chronic diseases and various intractable diseases. The clinic he runs is a special-needs expert clinic for chronic and intractable diseases in traditional Chinese medicine, not a general outpatient clinic. Most of the patients who come to him have conditions regarded as incurable and have lost confidence. Many have as many as ten such conditions at once and cannot even tell which hospital department to register with. They often go to a "famous doctor", only to find that one disease eases while another gets worse, which amounts to no treatment at all. To ensure the quality of treatment, he sees only two or three first-visit patients a day, by appointment made one week in advance, and never one more; a patient usually needs to take traditional Chinese medicine for more than two months. He follows the principle that a chronic disease, once identified, must be treated by holding steadily to the method.

Professor Zhang Jianwu differs from his colleagues in two main ways. First, patients who come to him, especially first-time patients, do not merely have a disease looked at. He spends more than an hour teaching them, without reservation, the principles of adjusting medication that a doctor needs to master during treatment, so that patients become "half a Chinese medicine practitioner", learn to recognize changes of cold and heat for themselves, and adjust the cold and heat properties of their prescriptions accordingly, thus getting twice the result with half the effort. Second, his treatment is a comprehensive conditioning of the whole person. He resolutely refuses to "treat the head for a headache and the foot for a sore foot".

He often says that Chinese medicine today differs from that of ancient times, that the way he practiced thirty years ago was very different from the way he practices now, and that diseases themselves changed long ago: "The late famous Shanxi doctor Li Ke excelled at treating critical, severe and incurable diseases. That was at the grassroots level in the early 1980s, when doctors and medicines were scarce. Today few critically ill patients seek Chinese medicine. In those days I often used 100g of aconite and 100g of ginseng to treat heart failure, but now I rarely do. Nowadays there are many miscellaneous diseases with mixed cold and heat. In the early 1990s I treated mostly single intractable diseases; now complex conditions are more common. With the changes in people's living environment, outlook on life and social environment, intractable diseases have changed beyond recognition. Patients who seek Chinese medicine now either have chronic diseases and want health maintenance or, eight or nine times out of ten, have intractable diseases that the 'famous doctors' in big hospitals could not cure. For simple and acute illnesses, people fear the trouble of decocting Chinese medicine and fear that it is too bitter and unpleasant to drink. Those who do turn to Chinese medicine have reached a dead end with no other way out, and they go among the people looking for a good doctor. In the past, nine out of ten Chinese medicine practitioners were good ones. Now there are too many quacks, long courses of treatment bring no effect, and people have gradually stopped believing in Chinese medicine, because nine times out of ten they meet fake practitioners and take their medicines. Fortunately, President Xi is truly great, and there is hope for the revitalization of Chinese medicine. But it is not so easy for a Chinese medicine that has gone the wrong way for decades to turn back, and it will be good if it truly flourishes in 20 years. What is happening now is only the setting right of things, equivalent to the initial stage of the reform and opening up."

Professor Zhang Jianwu holds dual qualifications as a licensed Chinese medicine practitioner and a licensed pharmacist and has more than 30 years of clinical experience. Since his youth he has been in contact with patients regarded as incurable, including famous traditional Chinese medicine practitioners who could not cure their own diseases. The rich practical experience he has accumulated gives him his boldness, and he often achieves therapeutic effects that many famous traditional Chinese medicine practitioners today cannot.

Zhou Moumou, an employee of Northwest China No.2 Factory, suffered from intractable oral ulcers. He was treated at Xijing Hospital for nearly ten years, and then developed cerebral infarction, rheumatoid disease and hepatitis B. He was treated at Shaanxi College of Traditional Chinese Medicine for a long time without obvious effect. Referred by another patient, he found Professor Zhang Jianwu, who prescribed three months of medicine at a time; the first visit took two hours. After taking the medicine, his oral ulcers healed and his rheumatoid disease and cerebral infarction were much improved, and he continued treatment for those two conditions for more than two years. A patient from the Weicheng District Power Supply Bureau, Zhang Moumou from Zhengning, Gansu Province, and other patients with ankylosing spondylitis had sought treatment everywhere; all of them were cured by Professor Zhang Jianwu and have had no recurrence for many years. Wei Moumou, a family member of an employee of Weicheng Oil Company, was cured of rheumatoid disease by Professor Zhang Jianwu in 1997 and developed intestinal cancer in 2010. He has been treated by Professor Zhang Jianwu for five years and his condition has not worsened. A patient surnamed Liu, from the eastern suburbs of Xi'an, suffered from bronchial asthma for 7 years, from the age of 7. He was treated for a long time in many large hospitals in Xi'an without success, although his mother, father, grandfather, uncle and aunt were mostly doctors or pharmacists, some of them very well known. He was cured by Professor Zhang Jianwu many years ago and is now a university sophomore. Qin Moumou, from Qishan County, Baoji City, Shaanxi Province, suffered from leukemia, which the major hospitals in Xi'an had long failed to cure. As a last resort, he found Professor Zhang Jianwu through a friend's introduction. After only one course of traditional Chinese medicine, the patient's white blood cell count rose from 1.3 to 3.9, close to the normal value of 4. Many patients like these, "sentenced to death" by countless hospitals, were cured by Professor Zhang Jianwu.

To make treatment accessible to more patients, Professor Zhang Jianwu set up a one-to-one remote diagnosis and treatment clinic as early as twenty years ago for patients who live too far away to visit. As long as the patient strictly follows his requirements, the curative effect is no worse than that of a face-to-face consultation, and sometimes better. This was twenty years earlier than the Internet remote diagnosis and treatment in Tianchang. Remote diagnosis and treatment is offered only to patients with chronic and intractable diseases. They must provide photographs of the upper and lower surfaces of the tongue, taken in natural morning light with a high-resolution mobile phone, along with carefully completed results of a TCM constitution test. They then fill out Professor Zhang Jianwu's detailed consultation form for chronic and intractable diseases and send it to his WeChat together with their recent hospital examination results. If necessary, they must also have a video consultation with Professor Zhang Jianwu before the medicine is sent.

[Disclaimer] This article represents only the author's personal views, and the copyright of its pictures and contents belongs solely to the original owner. If you hold rights to this content, please let us know by letter or email and we will act promptly; otherwise we will not bear any responsibility for related disputes.